Using AI to Caption My Japan Photos

A small experiment in automated storytelling

After my trip to Japan, I wanted to use AI to automatically generate meaningful captions for all of the photos our group took. Here's how I did it.

The Photo Organization Problem

Our group came back from Japan with more than a thousand photos. While the images themselves were vivid reminders of the trip, I wanted to add context: where each photo was taken, what was happening in the scene, and interesting details that might not be immediately obvious. I also wanted to add a layer of interactivity to the website so people could click on photos and get more information about what was happening. Finally, I wanted to extract 'Points of Interest' from each photo so I could highlight interesting cultural or historical details in a way that stands alone from the caption.

Manually writing captions and points of interest would have taken forever. So I turned to some modern tools for help.

The Technical Solution

Auto-captioning photos with AI isn't a new idea. What made vacation photos tricky was that the prompt needed context: where and when each photo was taken, who took it, who might appear in it, and general details about the trip. So I built a pipeline that uses Claude, an AI model from Anthropic, to analyze each photo along with its metadata (and a map generated from the GPS coordinates). The resulting captions turned out pretty decent. Here's a deep dive into how it works:

The Process:

- A Python script extracts EXIF data from each photo, including GPS coordinates and timestamp

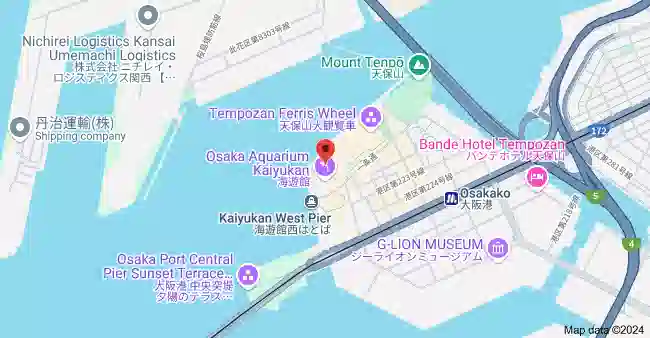

- For photos with GPS data, it generates a map using Google Maps via SerpAPI

- The script sends both the photo and map (if available) to Claude

- Claude analyzes the images and generates a natural language description and points of interest

- The caption and points of interest are extracted into a structured format using the Instructor library

- Everything gets stored in a SQLite database for the website to use.
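The first step of the process can be sketched roughly as follows. This is a minimal illustration, not the project's actual script: the function names `dms_to_decimal` and `read_photo_metadata` are hypothetical, and it assumes the photos carry standard EXIF GPS tags that Pillow can read. EXIF stores coordinates as degrees, minutes, and seconds plus a hemisphere letter, so they need converting to signed decimal degrees before they can be used to request a map.

```python
GPS_IFD = 0x8825            # EXIF pointer to the GPS sub-directory
EXIF_IFD = 0x8769           # EXIF pointer to the main Exif sub-directory
DATETIME_ORIGINAL = 0x9003  # tag holding the capture timestamp


def dms_to_decimal(dms, ref):
    """Convert (degrees, minutes, seconds) and an N/S/E/W letter to signed decimal degrees."""
    degrees, minutes, seconds = (float(v) for v in dms)
    decimal = degrees + minutes / 60 + seconds / 3600
    # South and west are negative by convention
    return -decimal if ref in ("S", "W") else decimal


def read_photo_metadata(path):
    """Hypothetical helper: return (latitude, longitude, timestamp); each may be None."""
    from PIL import Image  # imported lazily so dms_to_decimal works without Pillow

    with Image.open(path) as img:
        exif = img.getexif()
        gps = exif.get_ifd(GPS_IFD)
        taken = exif.get_ifd(EXIF_IFD).get(DATETIME_ORIGINAL)

    # GPS tag ids: 1 = latitude ref, 2 = latitude, 3 = longitude ref, 4 = longitude
    if 2 not in gps or 4 not in gps:
        return None, None, taken
    lat = dms_to_decimal(gps[2], gps.get(1, "N"))
    lon = dms_to_decimal(gps[4], gps.get(3, "E"))
    return lat, lon, taken
```

Photos without GPS data simply come back with `None` coordinates, which matches the "if available" handling of the map in the steps above.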

Example of a generated map:

Sample map generated using SerpAPI for a photo's GPS coordinates
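Once a photo and (optionally) its map exist as image files, they can be sent to Claude in a single message. The sketch below shows one way to assemble that message content using the base64 image blocks that Anthropic's Messages API accepts. The helper names `image_block` and `build_content` are hypothetical, and the assumption that photos are JPEGs and maps are PNGs is mine, not something the project confirms.

```python
import base64
from pathlib import Path


def image_block(path, media_type="image/jpeg"):
    """Wrap an image file as a base64 image content block."""
    data = base64.standard_b64encode(Path(path).read_bytes()).decode("ascii")
    return {
        "type": "image",
        "source": {"type": "base64", "media_type": media_type, "data": data},
    }


def build_content(prompt_text, photo_path, map_path=None):
    """Hypothetical helper: prompt text first, then the photo, then the map if there is one."""
    content = [{"type": "text", "text": prompt_text}, image_block(photo_path)]
    if map_path is not None:
        # Assumed: the generated map is saved as a PNG
        content.append(image_block(map_path, media_type="image/png"))
    return content
```

The returned list would be passed as the `content` of a single `user` message, so a photo without GPS data just produces a two-item list instead of three.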

The AI Prompt

One of the most important parts of this project is the system prompt that tells Claude how to write the captions. Here's what it looks like:

You are creating captions for a travel blog called 'Dan in Japan' about a vacation taken by two couples in October 2024. Write natural, conversational captions in all lowercase that avoid clichés and overwrought emotional descriptions.

You are being given a photo from the trip along with a screenshot of a map showing where the photo was taken using the serpAPI from the photo coordinates. The items identified in the map are for context only, it doesn't mean that the photo is exactly of something shown in the map.

The photographer was {photographer} and the date it was taken was {date_taken} at {location_context}. If Chuck took the photo, then it's him and his wife Ashley. If Daniel took the photo, then it's him and his wife Christina, and vice-versa.

Trip details:
- First time in Japan
- 10 days during second half of October 2024
- Visited Tokyo, Osaka, and Kyoto
- Stayed in:
  - Tokyo (Airbnb)
  - Osaka (traditional Japanese house)
  - Kyoto (western hotel)
- Any photos you see taken far outside of these cities would have been on the bullet train.

Caption guidelines:
- Always identify the people in the photo by name
- Write as if texting a friend - casual and genuine
- Avoid cliché travel writing phrases like "capturing the moment" or "radiating excitement"
- No exclamation points unless absolutely necessary
- Skip obvious details (we can see they're smiling/happy/etc)
- Keep it to 1-2 short sentences
- For US photos (pre/post trip), mention that it's from before/after the Japan trip

Don't:
- Use flowery or emotional language
- Describe obvious visual elements
- Add fictional details
- Assume activities from map locations

Your Points of Interest should be notable locations, cultural elements, or historical references that warrant further explanation in a modal window. Skip mundane points of interest.

Each point of interest you generate is going to be clickable and its going to open a google search for the phrase you pick, so choose things that can be googled and are japan related.

Here are the images to analyze:

After a lot of trial and error, I'm pretty happy with this prompt. The main issues that remain are when the vision model hallucinates details that aren't actually in the photos - something that can really only be fixed by using a more accurate vision model, rather than tweaking the prompts further.
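The prompt contains three placeholders in braces: `{photographer}`, `{date_taken}`, and `{location_context}`. A minimal sketch of filling them in per photo is below. It uses only one sentence of the prompt as the template, and the `fill_prompt` helper and the "an unknown location" fallback for photos without GPS data are my assumptions rather than the project's actual behavior.

```python
# One sentence from the system prompt, used here as a stand-in for the full text
PROMPT_TEMPLATE = (
    "The photographer was {photographer} and the date it was taken was "
    "{date_taken} at {location_context}."
)


def fill_prompt(template, photographer, date_taken, location_context=None):
    """Hypothetical helper: substitute per-photo metadata into the prompt template."""
    return template.format(
        photographer=photographer,
        date_taken=date_taken,
        # Assumed fallback wording for photos with no GPS data
        location_context=location_context or "an unknown location",
    )


print(fill_prompt(PROMPT_TEMPLATE, "Daniel", "2024-10-20", "Kyoto, Japan"))
```

`str.format` works here because the prompt has no other curly braces; a prompt that included literal braces would need them doubled or a different templating approach.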

The Results

Claude has proven surprisingly good at describing the photos. It notices architectural details, identifies cultural elements, and provides context about locations. While not perfect, the captions are far more detailed than what I could have written by hand for a thousand-plus photos.

The Points of Interest Extraction

In addition to generating captions, the system incorporates the Instructor library to extract points of interest from each photo. This enables the website to highlight notable locations, cultural elements, or historical references found in the images.

The Instructor library is a lightweight Python library that simplifies extracting structured data from language models. In our case, we're using it to parse the AI's responses into a structured format containing the captions and points of interest.

Here's how I integrated it into the code:

import os
from typing import List

import instructor
from anthropic import Anthropic
from pydantic import BaseModel


class PointOfInterest(BaseModel):
    name: str
    description: str


class PhotoAnalysis(BaseModel):
    caption: str
    points_of_interest: List[PointOfInterest]


def generate_caption(...):
    # Parameters and the construction of instruction_text are elided here

    # Initialize Anthropic client with Instructor
    anthropic_client = instructor.from_anthropic(
        Anthropic(api_key=os.environ['ANTHROPIC_API_KEY'])
    )

    # Send the request to the AI model
    response = anthropic_client.messages.create(
        model='claude-3-5-sonnet-latest',
        max_tokens=1024,  # required by the Anthropic API; 1024 is an assumed value
        messages=[{'role': 'user', 'content': instruction_text}],
        response_model=PhotoAnalysis,
        temperature=0
    )

    # Extract caption and points of interest from the response
    return response.caption, [poi.model_dump() for poi in response.points_of_interest]

By defining the expected response structure using Pydantic models, the Instructor library ensures that the data returned by the AI model is structured according to our specifications. This makes it easier to process and store the captions and points of interest in our database.
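For the storage step, here is a minimal sketch of how a caption and its points of interest might be written to SQLite using only the standard library. The table name `photos`, its columns, and the choice to store points of interest as a JSON string are all assumptions for illustration; the project's real schema may differ.

```python
import json
import sqlite3


def save_analysis(conn, filename, caption, points_of_interest):
    """Hypothetical helper: upsert one photo's caption and points of interest."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS photos ("
        "filename TEXT PRIMARY KEY, "
        "caption TEXT NOT NULL, "
        "points_of_interest TEXT NOT NULL)"
    )
    conn.execute(
        "INSERT OR REPLACE INTO photos (filename, caption, points_of_interest) "
        "VALUES (?, ?, ?)",
        # The list of dicts is serialized to JSON so it fits in one column
        (filename, caption, json.dumps(points_of_interest)),
    )
    conn.commit()


def load_analysis(conn, filename):
    """Return (caption, points_of_interest) for a photo, or None if it is not stored."""
    row = conn.execute(
        "SELECT caption, points_of_interest FROM photos WHERE filename = ?",
        (filename,),
    ).fetchone()
    if row is None:
        return None
    return row[0], json.loads(row[1])
```

Because `generate_caption` already returns the points of interest as plain dictionaries, its output can be passed to a function like `save_analysis` without further conversion. Keying on the filename means re-running the pipeline on a photo overwrites its earlier caption instead of duplicating it.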

The Stack

- Backend: FastAPI + SQLite

- Frontend: Jinja2 Templates + Tailwind CSS

- Image Processing: Pillow (PIL) for EXIF data

- AI: Claude 3.5 Sonnet via Anthropic's API + Instructor

- Maps: Leaflet.js + OpenStreetMap

- Development: Cursor IDE

Also, nearly 100% of the code for the website was generated with AI using the Cursor IDE. All the code is available on GitHub if you're interested in the implementation details.

This project is an experiment in AI-driven storytelling. While not perfect, it's an interesting way to share vacation photos with friends and family.